Building a Common Enterprise Project Architecture on K8S (Part: K8S Cluster Deployment)

Published: 2018-11-30 20:11:21 | Category: Linux Operations

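I. Environment preparation

II. Deploying the K8S cluster master node

III. Deploying the worker nodes (run on both k8s-node01 and k8s-node02)

IV. Deploying Ingress-nginx

V. Deploying the NFS server (run on the k8s-stor01 host)

VI. Deploying the Helm environment, used later to deploy a highly available Redis cluster with Helm

VII. Deploying a highly available Redis cluster with Helm

VIII. Deploying the Tomcat application

IX. Using Ingress-nginx as the cluster entry point to proxy Tomcat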

I. Environment preparation

1. K8S cluster host plan

| Hostname | IP | Applications | Description |
| --- | --- | --- | --- |
| k8s-master | 192.168.1.11 | kube-apiserver, kube-scheduler, kube-controller-manager, kubectl, flannel, CoreDNS | k8s master |
| k8s-node01 | 192.168.1.21 | kubelet, kube-proxy, flannel, CoreDNS | k8s nodes |
| k8s-node02 | 192.168.1.22 | kubelet, kube-proxy, flannel, CoreDNS | k8s nodes |
| k8s-stor01 | 192.168.1.51 | nfs | NFS storage, the backing infrastructure for PVs |

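2. Create the virtual machines with Vagrant

3. Set the time zone and synchronize time

4. Set up SSH key-based login between the hosts in the K8S cluster

5. Configure hosts-file name resolution and sync the hosts file to every host in the cluster

6. Prepare the yum repository (to be synced to all hosts in the cluster)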

Alibaba Cloud mirror: https://mirrors.aliyun.com

GPG key: https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

[root@k8s-master yum.repos.d]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

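7. Sync the yum repository to the other hosts in the K8S cluster

Note: once the base environment is ready, take a Vagrant snapshot of each host as a backup: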

$ vagrant.exe snapshot save k8s-master base-env

$ vagrant.exe snapshot save k8s-node01 base-env

$ vagrant.exe snapshot save k8s-node02 base-env

$ vagrant.exe snapshot save k8s-stor01 base-env

II. Deploying the K8S cluster master node

1. List the available package versions

[root@k8s-master ~]# yum list docker-ce kubelet kubeadm kubectl --showduplicates

2. Install the pinned package versions

[root@k8s-master ~]# yum install docker-ce-17.12.1.ce kubelet-1.11.4 kubectl-1.11.4 kubeadm-1.11.4

3. Configure Docker to use a registry mirror inside China

[root@k8s-master ~]# vim /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd --registry-mirror=https://registry.docker-cn.com

4. Enable the services at boot

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable docker

[root@k8s-master ~]# systemctl enable kubelet

5. Start the Docker service and check its information

[root@k8s-master ~]# systemctl start docker

[root@k8s-master ~]# docker info # the China registry mirror is in use

Registry Mirrors:

https://registry.docker-cn.com/

Live Restore Enabled: false

6. The kubelet configuration file:

[root@k8s-master ~]# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false" # suppress the error the kubelet raises when swap is enabled on the system
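The `--fail-swap-on=false` override only matters on hosts where swap is actually active (the alternative is to turn swap off with `swapoff -a`). As a rough sketch, the check below reads a `/proc/swaps`-style file, where the first line is a header and every further line is an active swap area. The function name `swap_in_use` is made up for this illustration.

```shell
# Return success if the given /proc/swaps-style file lists at least one
# active swap area. The first line of the file is a header.
swap_in_use() {
  # $1 = file to inspect (defaults to /proc/swaps)
  awk 'NR > 1 { n++ } END { exit !(n > 0) }' "${1:-/proc/swaps}" 2>/dev/null
}

if swap_in_use; then
  echo "swap is enabled: keep --fail-swap-on=false and --ignore-preflight-errors=Swap"
else
  echo "swap is off: the kubelet override is not needed"
fi
```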

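7. Prepare the images needed to initialize the Kubernetes master node with kubeadm (because of network restrictions, images cannot be pulled directly from registries such as *.gcr.io, so pull them from a mirror in advance and retag them)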

[root@k8s-master ~]# docker pull mirrorgooglecontainers/kube-apiserver-amd64:v1.11.4

[root@k8s-master ~]# docker tag mirrorgooglecontainers/kube-apiserver-amd64:v1.11.4 k8s.gcr.io/kube-apiserver-amd64:v1.11.4

[root@k8s-master ~]# docker pull mirrorgooglecontainers/kube-controller-manager-amd64:v1.11.4

[root@k8s-master ~]# docker tag mirrorgooglecontainers/kube-controller-manager-amd64:v1.11.4 k8s.gcr.io/kube-controller-manager-amd64:v1.11.4

[root@k8s-master ~]# docker pull mirrorgooglecontainers/kube-scheduler-amd64:v1.11.4

[root@k8s-master ~]# docker tag mirrorgooglecontainers/kube-scheduler-amd64:v1.11.4 k8s.gcr.io/kube-scheduler-amd64:v1.11.4

[root@k8s-master ~]# docker pull coredns/coredns:1.1.3

[root@k8s-master ~]# docker tag coredns/coredns:1.1.3 k8s.gcr.io/coredns:1.1.3

[root@k8s-master ~]# docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.11.4

[root@k8s-master ~]# docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.11.4 k8s.gcr.io/kube-proxy-amd64:v1.11.4

[root@k8s-master ~]# docker pull mirrorgooglecontainers/pause:3.1

[root@k8s-master ~]# docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

[root@k8s-master ~]# docker pull mirrorgooglecontainers/etcd-amd64:3.2.18

[root@k8s-master ~]# docker tag mirrorgooglecontainers/etcd-amd64:3.2.18 k8s.gcr.io/etcd-amd64:3.2.18
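The pull-and-retag commands above all follow one pattern, so they could be generated by a small script. The sketch below is one way to do that; the function name `retag_cmds` is made up for this illustration, and it only prints the commands so they can be reviewed first. CoreDNS is not included because it is pulled from `coredns/coredns` rather than `mirrorgooglecontainers`.

```shell
# Print the docker pull/tag command pair for each image name given as an
# argument. Nothing is executed here; pipe the output to `sh` to run it.
retag_cmds() {
  for img in "$@"; do
    echo "docker pull mirrorgooglecontainers/${img}"
    echo "docker tag mirrorgooglecontainers/${img} k8s.gcr.io/${img}"
  done
}

retag_cmds \
  kube-apiserver-amd64:v1.11.4 \
  kube-controller-manager-amd64:v1.11.4 \
  kube-scheduler-amd64:v1.11.4 \
  kube-proxy-amd64:v1.11.4 \
  pause:3.1 \
  etcd-amd64:3.2.18
```

The same function covers the worker nodes, which only need `pause:3.1` and `kube-proxy-amd64:v1.11.4`.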

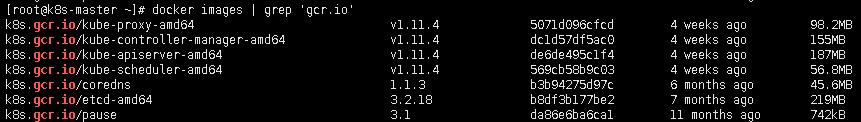

[root@k8s-master ~]# docker images | grep 'gcr.io' # verify that the pulled images are tagged correctly

8. Initialize the Kubernetes master node with kubeadm

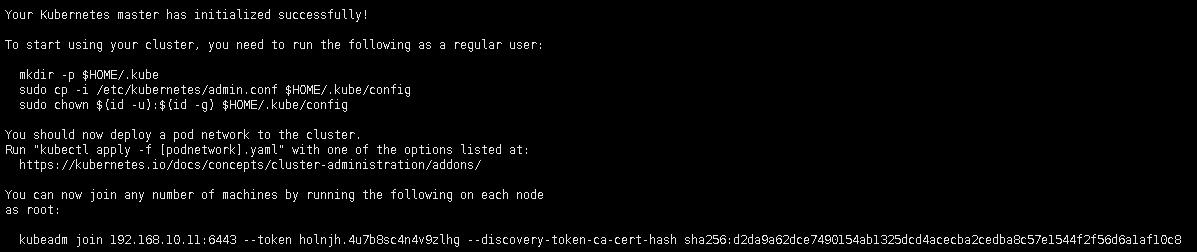

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=192.168.10.11 --kubernetes-version=v1.11.4 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

kubeadm join 192.168.10.11:6443 --token holnjh.4u7b8sc4n4v9zlhg --discovery-token-ca-cert-hash sha256:d2da9a62dce7490154ab1325dcd4acecba2cedba8c57e1544f2f56d6a1af10c8

9. Set up the default configuration for kubectl, the command-line client for the cluster's API server

[root@k8s-master ~]# mkdir -p $HOME/.kube

[root@k8s-master ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

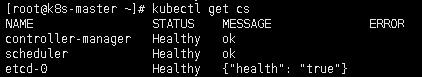

10、使用kubectl工具检查集群节点组件状态

[root@k8s-master ~]# kubectl get cs #ComponentStatus组件状态

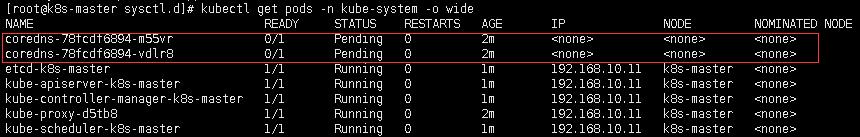

[root@k8s-master sysctl.d]# kubectl get pods -n kube-system -o wide # 还没有部署网络,因此dns还没正常运行

11. Deploy the flannel network add-on

Official references:

https://github.com/coreos/flannel/

https://kubernetes.io/docs/getting-started-guides/kubeadm/

[root@k8s-master manifests]# pwd

/opt/manifests

[root@k8s-master manifests]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

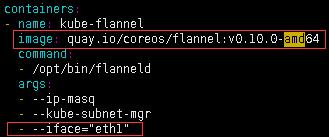

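By default, flannel uses the interface of the host's default route (eth0) for inter-host communication. This cluster was created with Vagrant, where the default-route interface (eth0) cannot be used for traffic between the virtual machines; an additional interface (eth1) carries it instead. The flannel manifest therefore has to be modified to name the interface on which the hosts can reach each other (eth1).

[root@k8s-master manifests]# vim kube-flannel.yml # add the flanneld startup option (note: edit the container whose image is the amd64 one)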
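The text does not spell out the option added in the editor; flanneld's `--iface` flag is the one that selects the interface. As a sketch, the edit could also be scripted. It assumes that the container args in the downloaded manifest contain a line reading `- --kube-subnet-mgr`, which should be checked against the file actually fetched. The function name `add_iface` is made up for this illustration.

```shell
# Append "- --iface=<name>" after every "- --kube-subnet-mgr" argument in a
# flannel manifest, reusing that line's indentation.
add_iface() {
  # $1 = path to kube-flannel.yml, $2 = interface name (eth1 in this cluster)
  grep -q -- "--iface=$2" "$1" && return 0   # already present, nothing to do
  awk -v iface="$2" '
    { print }
    /^[ \t]*- --kube-subnet-mgr[ \t]*$/ {
      indent = $0
      sub(/-.*/, "", indent)   # strip from the first dash, keeping the indentation
      print indent "- --iface=" iface
    }
  ' "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}
```

Running `add_iface kube-flannel.yml eth1` changes every DaemonSet in the manifest, not only the amd64 one. The extra architectures are not scheduled on this cluster, so only the amd64 change takes effect.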

[root@k8s-master manifests]# kubectl apply -f kube-flannel.yml

[root@k8s-master ~]# kubectl get pods -n kube-system -o wide | grep flannel

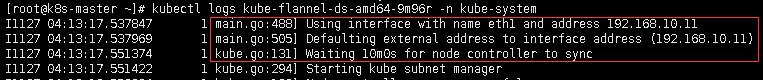

[root@k8s-master ~]# kubectl logs kube-flannel-ds-amd64-9m96r -n kube-system

12. View the resources running on the master node and related information (-n kube-system selects the namespace, -o wide prints the wide output format)

[root@k8s-master ~]# kubectl get all -n kube-system -o wide

III. Deploying the worker nodes (run on both k8s-node01 and k8s-node02)

1. Install the packages

[root@k8s-node01 ~]# yum install docker-ce-17.12.1.ce kubelet-1.11.4 kubeadm-1.11.4

2. Copy the Docker and kubelet configuration from the master node to the worker nodes

[root@k8s-master ~]# scp /usr/lib/systemd/system/docker.service k8s-node01:/usr/lib/systemd/system/docker.service

[root@k8s-master ~]# scp /usr/lib/systemd/system/docker.service k8s-node02:/usr/lib/systemd/system/docker.service

[root@k8s-master ~]# scp /etc/sysconfig/kubelet k8s-node01:/etc/sysconfig/kubelet

[root@k8s-master ~]# scp /etc/sysconfig/kubelet k8s-node02:/etc/sysconfig/kubelet

3. Enable Docker and kubelet at boot

[root@k8s-node01 ~]# systemctl daemon-reload

[root@k8s-node01 ~]# systemctl enable docker.service

[root@k8s-node01 ~]# systemctl enable kubelet.service

4. Start the Docker service

[root@k8s-node01 ~]# systemctl start docker.service

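5. Prepare the images needed to join the node to the K8S cluster (because of network conditions in China, images cannot be pulled directly from *.gcr.io, so prepare them in advance)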

[root@k8s-node01 ~]# docker pull mirrorgooglecontainers/pause:3.1

[root@k8s-node01 ~]# docker tag mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

[root@k8s-node01 ~]# docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.11.4

[root@k8s-node01 ~]# docker tag mirrorgooglecontainers/kube-proxy-amd64:v1.11.4 k8s.gcr.io/kube-proxy-amd64:v1.11.4

6. Join the node to the K8S cluster

[root@k8s-node01 ~]# kubeadm join 192.168.10.11:6443 --token holnjh.4u7b8sc4n4v9zlhg --discovery-token-ca-cert-hash sha256:d2da9a62dce7490154ab1325dcd4acecba2cedba8c57e1544f2f56d6a1af10c8 --ignore-preflight-errors=Swap

7. Repeat the same steps on k8s-node02 to add it to the cluster.

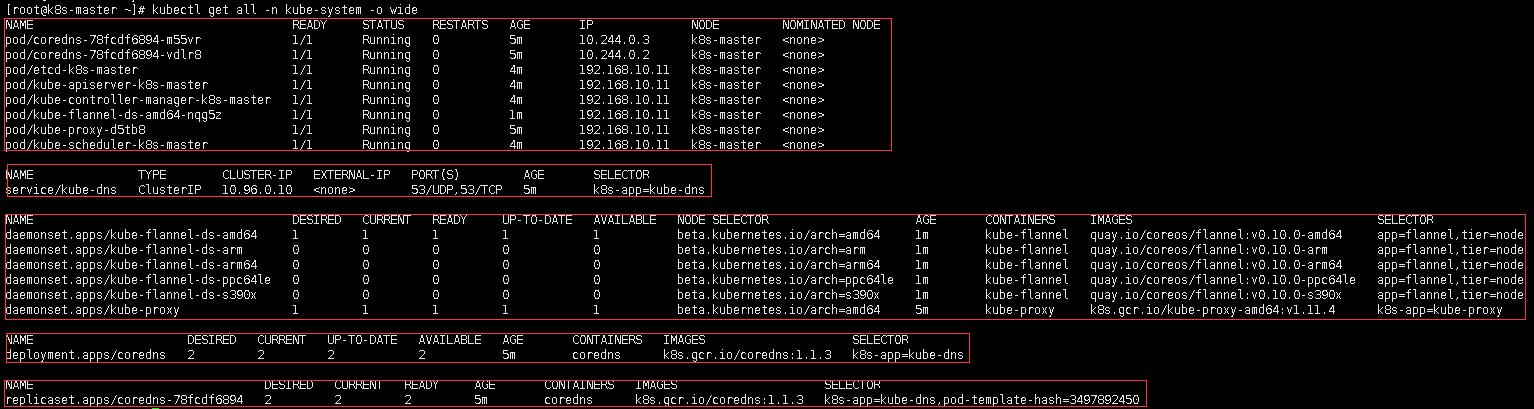

8. On the master, check the status of the cluster nodes; k8s-node01 and k8s-node02 have been added successfully

[root@k8s-master ~]# kubectl get nodes -o wide

9. Finally, review the deployment of the whole cluster

[root@k8s-master ~]# kubectl get all -n kube-system -o wide

IV. Deploying Ingress-nginx

Official documentation: https://github.com/kubernetes/ingress-nginx/blob/master/docs/deploy/index.md

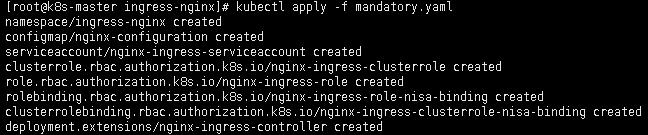

1. Create the Namespace, ConfigMap, ServiceAccount, ClusterRole and its ClusterRoleBinding, Role and its RoleBinding, and Deployment used by ingress-nginx

[root@k8s-master ingress-nginx]# pwd

/opt/manifests/ingress-nginx

[root@k8s-master ingress-nginx]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml

[root@k8s-master ingress-nginx]# kubectl apply -f mandatory.yaml

The Deployment uses the image quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.21.0

2. Create a Service to front the Pods created by the Deployment

[root@k8s-master ingress-nginx]# wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/provider/baremetal/service-nodeport.yaml

[root@k8s-master ingress-nginx]# kubectl apply -f service-nodeport.yaml

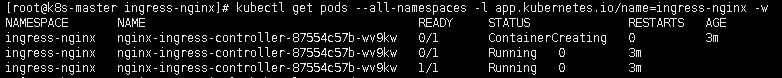

3. Verify the installation (check that the ingress-nginx Pod is running)

[root@k8s-master ingress-nginx]# kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx -w

4. View all resources in the ingress-nginx namespace

[root@k8s-master ~]# kubectl get all -n ingress-nginx

V. Deploying the NFS server (run on the k8s-stor01 host)

1. Install the NFS packages:

[root@k8s-stor01 ~]# yum install vim nfs-utils -y

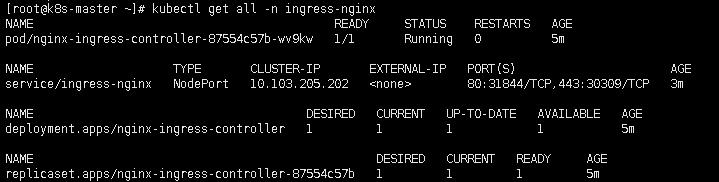

2. Configure the NFS server

[root@k8s-stor01 ~]# mkdir -p /data/nfs/volume_{01..03}

[root@k8s-stor01 ~]# chmod 777 /data/nfs/volume_0* # clients that mount the exports need write permission

[root@k8s-stor01 ~]# vim /etc/exports

[root@k8s-stor01 ~]# systemctl daemon-reload

[root@k8s-stor01 ~]# systemctl enable nfs.service

[root@k8s-stor01 ~]# systemctl start nfs.service

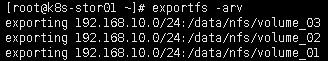

[root@k8s-stor01 ~]# exportfs -arv

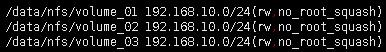
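The contents of /etc/exports are not reproduced in the text. As a sketch only, the entries for the three directories created above could be generated as follows. The client network `192.168.1.0/24` and the export options `rw,sync,no_root_squash` are assumptions for this illustration, not values taken from the article, and the function name `gen_exports` is made up.

```shell
# Print one /etc/exports entry per volume directory.
gen_exports() {
  # $1 = client network allowed to mount (assumed value, adjust to your LAN)
  for n in 01 02 03; do
    echo "/data/nfs/volume_${n} $1(rw,sync,no_root_squash)"
  done
}

gen_exports 192.168.1.0/24
```

Redirecting the output into /etc/exports and then running `exportfs -arv` would publish the three directories.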

3. Test-mount an NFS export

[root@k8s-master ~]# mount -t nfs k8s-stor01:/data/nfs/volume_01 /mnt

[root@k8s-master ~]# df -Th | grep mnt

4. Define the NFS storage as PVs in the K8S cluster

[root@k8s-master manifests]# pwd

/opt/manifests

[root@k8s-master manifests]# vim k8s-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01
  labels:
    name: pv01
spec:
  nfs:
    server: k8s-stor01
    path: /data/nfs/volume_01
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02
  labels:
    name: pv02
spec:
  nfs:
    server: k8s-stor01
    path: /data/nfs/volume_02
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03
  labels:
    name: pv03
spec:
  nfs:
    server: k8s-stor01
    path: /data/nfs/volume_03
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: 10Gi
---

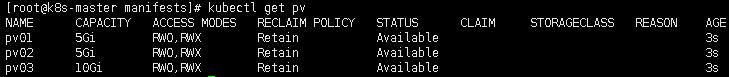

[root@k8s-master manifests]# kubectl apply -f k8s-pv.yaml

[root@k8s-master manifests]# kubectl get pv
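The three PersistentVolume definitions differ only in their suffix and capacity, so the manifest could be generated from a template instead of written by hand. The sketch below mirrors the fields used in k8s-pv.yaml; the function name `gen_pv` is made up for this illustration.

```shell
# Print one PersistentVolume document for the NFS export volume_<suffix>.
gen_pv() {
  # $1 = volume suffix (for example 01), $2 = capacity (for example 5Gi)
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv$1
  labels:
    name: pv$1
spec:
  nfs:
    server: k8s-stor01
    path: /data/nfs/volume_$1
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: $2
---
EOF
}

gen_pv 01 5Gi
gen_pv 02 5Gi
gen_pv 03 10Gi
```

The output can be saved as k8s-pv.yaml or piped straight into `kubectl apply -f -`.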

VI. Deploying the Helm environment, used later to deploy a highly available Redis cluster with Helm

1. Install the Helm client

[root@master ~]# wget https://storage.googleapis.com/kubernetes-helm/helm-v2.9.1-linux-amd64.tar.gz

[root@master ~]# tar -xvf helm-v2.9.1-linux-amd64.tar.gz

[root@master ~]# cd linux-amd64/

[root@master linux-amd64]# cp helm /usr/bin/

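2. Deploy Tiller, Helm's server-side management service, using the Helm client

Tiller needs cluster administration privileges to manage applications (install, uninstall, and so on).

Create a ServiceAccount and bind it to the cluster-admin role:

Example RBAC configuration: https://github.com/helm/helm/blob/release-2.9/docs/rbac.md

(1) Create the ServiceAccount and grant it permissions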

[root@master helm]# pwd

/root/manifests/helm

[root@master helm]# vim tiller-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

[root@master helm]# kubectl apply -f tiller-rbac.yaml

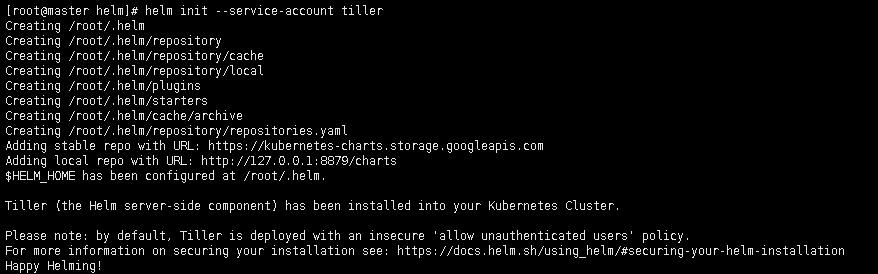

(2) Run helm init to install Tiller

Prepare the image in advance:

[root@node01 ~]# docker search tiller:v2.9.1

[root@node01 ~]# docker pull tlwtlw/tiller:v2.9.1

[root@node01 ~]# docker tag tlwtlw/tiller:v2.9.1 gcr.io/kubernetes-helm/tiller:v2.9.1

[root@master helm]# helm init --service-account tiller # by default, ~/.kube/config is used as the context file for calling the K8S API server

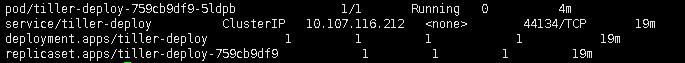

[root@master ~]# kubectl get all -n kube-system | grep tiller | grep -v '^$'

[root@master ~]# helm version # show the Helm client and server versions

Related articles:

Building a Common Enterprise Project Architecture on K8S (Part: Project Deployment)